Building Your Very Own Digital Alfred

Imagine having your very own digital assistant—a personal butler, not in a tux, but right at your fingertips, ready to help you code, research, and manage your projects. That’s exactly what we’re creating here: Alfred, your AI-powered, personal development butler.

Whether it’s opening programs with a simple command, providing summaries of research topics, or even helping maintain your development environment, Alfred’s goal is to streamline your workflow. No more distractions, no more endless browser tabs—just a focused assistant who understands your needs. Think of it as your productivity partner that learns as you grow.

Reason and Goal:

- Get an AI-powered helper to speed up programming and research tasks.

- Use natural language to open programs instantly.

- Automate project management: open VS Code, manage Git, integrate code generation tools effortlessly.

- Summarize research, find papers, and explore content on YouTube or Reddit.

- Boost productivity by integrating AI tools like Aider.

Long-Term Vision:

The ultimate goal is for Alfred to understand your needs intuitively, whether you’re developing projects or diving deep into research. Imagine running this tool from a USB stick, with full voice control activated by saying, “Hey Alfred.” It should be seamless, always running in the background, gathering information for you and only stepping in when you ask for it, keeping you focused on what matters most.

Fun fact: The agent is named Alfred, like Batman’s butler. It stands for Automated Logic and Functionality for Research, Engineering, and Development.

Methodology and Tools Used:

All code is written in Python, with ChatGPT and Claude as co-pilots.

The project is a work in progress, and the code is publicly available on GitHub.

In this blog post, we’ll start with something simple yet powerful: building an interactive chat window that lets you communicate with Alfred through either the Claude API or the OpenAI API.

Interactive Chat Window

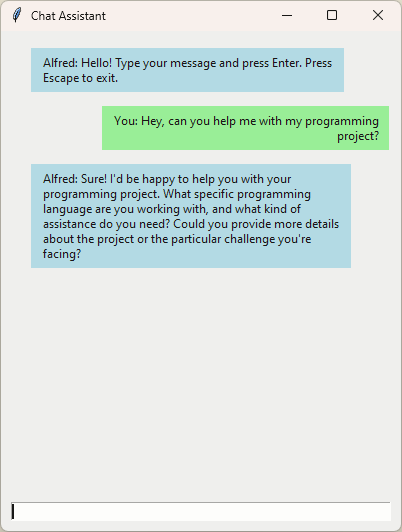

The first step was to create a simple chat window to interact with the AI agent.

To generate the code, I used ChatGPT. The first version had some flaws and only displayed text without any real structure. So, I made some improvements—adding message boxes, aligning user messages to the right and assistant messages to the left.

I also removed a few clunky buttons that added messages or closed the program, making the UI cleaner and more intuitive.

The current version looks like this:

And here’s the AI-generated code:

    import datetime
    import tkinter as tk

    # chat_with_ai(messages) is the project's API wrapper. It is not shown in
    # this post and must be imported from wherever it is defined in the repo.


    class ChatAssistant:
        def __init__(self, root):
            self.root = root
            self.root.title("Chat Assistant")
            self.root.geometry("400x500")
            self.root.attributes('-alpha', 0.9)  # Set window transparency

            # Container for chat messages
            self.chat_frame = tk.Frame(self.root, bg="#f0f0f0")
            self.chat_frame.pack(padx=10, pady=10, expand=True, fill='both')

            # Scrollable frame for chat bubbles
            self.canvas = tk.Canvas(self.chat_frame, bg="#f0f0f0", highlightthickness=0)
            self.scrollbar = tk.Scrollbar(self.chat_frame, orient="vertical", command=self.canvas.yview)
            self.scrollable_frame = tk.Frame(self.canvas, bg="#f0f0f0")

            # Keep the scroll region in sync with the size of the inner frame
            self.scrollable_frame.bind(
                "<Configure>",
                lambda e: self.canvas.configure(scrollregion=self.canvas.bbox("all"))
            )

            self.canvas.create_window((0, 0), window=self.scrollable_frame, anchor="nw")
            self.canvas.configure(yscrollcommand=self.scrollbar.set)

            self.canvas.pack(side="left", fill="both", expand=True)
            self.scrollbar.pack(side="right", fill="y")

            # Enable mouse scrolling
            self.canvas.bind_all("<MouseWheel>", self._on_mousewheel)

            # Entry widget for user input
            self.entry_widget = tk.Entry(self.root)
            self.entry_widget.pack(padx=10, pady=10, fill='x')
            self.entry_widget.bind("<Return>", self.process_input)
            self.entry_widget.bind("<Escape>", lambda e: self.root.quit())

            self.log_file = "assistant.log"
            self.chat_history = [
                {"role": "system", "content": "You are a helpful assistant."},
                {"role": "assistant", "content": "Hello! Type your message and press Enter. Press Escape to exit."}
            ]

            self.add_message("Alfred", "Hello! Type your message and press Enter. Press Escape to exit.")

        def _on_mousewheel(self, event):
            # On Windows, event.delta is a multiple of 120 per wheel notch
            self.canvas.yview_scroll(-1 * (event.delta // 120), "units")

        def process_input(self, event):
            user_input = self.entry_widget.get().strip()
            if user_input:
                self.add_message("You", user_input, right=True)
                self.log_message(user_input)
                self.chat_history.append({"role": "user", "content": user_input})

                # Call GPT-4o to get response
                assistant_response = self.chat_with_gpt_4o()
                self.add_message("Alfred", assistant_response, right=False)
                self.log_message(f"Alfred: {assistant_response}")
                self.chat_history.append({"role": "assistant", "content": assistant_response})

            self.entry_widget.delete(0, tk.END)

        def add_message(self, sender, message, right=False):
            bubble_frame = tk.Frame(self.scrollable_frame, bg="#f0f0f0", pady=5)
            bubble_label = tk.Label(
                bubble_frame,
                text=f"{sender}: {message}",
                padx=10,
                pady=5,
                wraplength=300,
                justify="right" if right else "left",
                bg="#add8e6" if sender == "Alfred" else "#90ee90",
                anchor="e" if right else "w"
            )
            bubble_label.pack(anchor="e" if right else "w", padx=(50 if right else 10, 10 if right else 50))
            bubble_frame.pack(anchor="e" if right else "w", fill='x', padx=10, pady=2)

            # Scroll to the newest message
            self.scrollable_frame.update_idletasks()
            self.canvas.yview_moveto(1.0)

        def log_message(self, message):
            timestamp = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
            with open(self.log_file, "a") as log_file:
                log_file.write(f"[{timestamp}] {message}\n")

        def chat_with_gpt_4o(self):
            # Function to call GPT-4o with all messages
            self.log_message("test")
            response = chat_with_ai(self.chat_history)
            return response


    if __name__ == "__main__":
        root = tk.Tk()
        assistant = ChatAssistant(root)
        root.mainloop()

Talking to the AI

Once the chat window was ready, it was time to integrate the AI. The first step was adding the ChatGPT API, which turned out to be straightforward. I used dotenv to load the OpenAI key from a .env file, which stays out of the repository so the key doesn’t get pushed to GitHub.

ChatGPT also added some error handling, although these errors aren’t yet caught in the main process.

    import logging

    import openai
    from dotenv import load_dotenv
    from openai import OpenAI

    # Setup (assumed; not shown in the original snippet): load OPENAI_API_KEY
    # from .env, then create the client and logger the function relies on.
    load_dotenv()
    client = OpenAI()
    logger = logging.getLogger(__name__)


    def chat_with_gpt(prompts, model="gpt-4o-mini"):
        try:
            logger.info(f"Starting OpenAI chat with model: {model}")

            messages = [
                {"role": "system", "content": "You are a helpful assistant."},
            ]
            messages += prompts

            logger.debug(f"Sending request to OpenAI with {len(messages)} messages")
            response = client.chat.completions.create(
                model=model,
                messages=messages,
            )

            logger.info("Successfully received OpenAI response")
            return response.choices[0].message.content

        except openai.APIConnectionError as e:
            logger.error(f"OpenAI connection error: {e.__cause__}")
            raise
        except openai.RateLimitError as e:
            logger.error(f"OpenAI rate limit error {e.status_code}: {e.response}")
            raise
        # BadRequestError is a subclass of APIStatusError, so it has to be
        # caught first; otherwise this branch would never run.
        except openai.BadRequestError as e:
            logger.error(f"OpenAI bad request error {e.status_code}: {e.response}")
            raise
        except openai.APIStatusError as e:
            logger.error(f"OpenAI status error {e.status_code}: {e.response}")
            raise
        except Exception as e:
            logger.error(f"Unexpected error in OpenAI chat: {e}", exc_info=True)
            raise

Next, I added the Claude API. This wasn’t as smooth. Despite using both ChatGPT and Claude for assistance, neither managed to generate working code. In the end, I used the official Claude API documentation to get it running, proving that sometimes even AI needs a helping hand. 😉
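One difference that can trip up a port from the OpenAI-style call: Anthropic's Messages API takes the system prompt as a top-level `system` parameter instead of a message with the role "system", and, as far as I know, expects the conversation to open with a user turn. The sketch below is not the project's actual code. It is a minimal illustration that assumes the official `anthropic` Python SDK, with `client` being an `anthropic.Anthropic()` instance. The helper names `to_claude_messages` and `chat_with_claude` are hypothetical, and the model name is left to the caller.

```python
def to_claude_messages(history):
    """Split an OpenAI-style history into (system_prompt, messages)."""
    system_parts = [m["content"] for m in history if m["role"] == "system"]
    messages = [
        {"role": m["role"], "content": m["content"]}
        for m in history
        if m["role"] != "system"
    ]
    # Drop leading assistant turns (such as the canned greeting) so the
    # conversation starts with a user message.
    while messages and messages[0]["role"] != "user":
        messages.pop(0)
    return "\n".join(system_parts), messages


def chat_with_claude(client, history, model, max_tokens=1024):
    """Send the history to Claude and return the reply text.

    `client` is assumed to be an `anthropic.Anthropic()` instance.
    """
    system_prompt, messages = to_claude_messages(history)
    kwargs = {"model": model, "max_tokens": max_tokens, "messages": messages}
    if system_prompt:
        # The system prompt is a top-level parameter, not a message.
        kwargs["system"] = system_prompt
    response = client.messages.create(**kwargs)
    # The reply is a list of content blocks; the first one holds the text.
    return response.content[0].text
```

With the SDK installed, `anthropic.Anthropic()` reads the key from the `ANTHROPIC_API_KEY` environment variable, so the same .env approach used for the OpenAI key should carry over.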

Running Installs on Startup

I wanted Alfred to be as easy to run as possible—ideally, just double-click and go. To achieve this, I needed Python packages to install automatically when launching the script. I asked Claude for a quick solution, which wasn’t exactly elegant, but it gets the job done for now. This will be marked as a future TODO for optimization:

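    import importlib.metadata
    import os
    import re
    import subprocess
    import sys

    # Install packages from requirements.txt if they are missing
    requirements_file = "requirements.txt"

    if os.path.exists(requirements_file):
        with open(requirements_file) as f:
            required_packages = f.read().splitlines()

        for package in required_packages:
            package = package.strip()
            if not package or package.startswith("#"):
                continue  # Skip blank lines and comments
            # Check the installed distribution rather than importing the module:
            # the pip name can differ from the import name (python-dotenv is
            # imported as dotenv), and specifiers other than == may be used.
            name = re.split(r"[\s<>=!~;\[]", package, maxsplit=1)[0]
            try:
                importlib.metadata.version(name)
            except importlib.metadata.PackageNotFoundError:
                subprocess.check_call([sys.executable, "-m", "pip", "install", package])

Challenges and Improvements Needed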

- The chat window still needs refinement. When the window is resized, user messages lose their right alignment, and after you press Enter the input field doesn’t clear until the assistant responds.

- Errors aren’t properly handled yet—if an error occurs, the program just stops.

- Neither Claude nor ChatGPT could generate working code for the Claude API, so I had to write it myself using the documentation.

- Conversations are stored in the main process, but they should be managed by the assistant to allow for multiple assistants with different tasks.

- Documentation and comments are currently lacking—something to revisit when time permits. (Though, as a true developer at heart, I reserve the right to avoid them. 😄)
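For the error-handling point in the list above, one possible direction is to wrap the AI call so that a failure turns into a chat message instead of ending the program. This is only a sketch under assumptions: `safe_reply` is a hypothetical helper, and `get_reply` stands for whatever function sends the history to the API (for example `chat_with_gpt`).

```python
import logging

logger = logging.getLogger("alfred")


def safe_reply(get_reply, chat_history):
    """Call the AI backend and return (text, ok) instead of raising."""
    try:
        return get_reply(chat_history), True
    except Exception as exc:  # broad on purpose: the chat window must stay alive
        logger.error("AI request failed: %s", exc, exc_info=True)
        message = f"Sorry, something went wrong ({type(exc).__name__}). Please try again."
        return message, False
```

In `process_input`, the returned text could be shown as a normal Alfred bubble, while the `ok` flag decides whether the reply is appended to `chat_history`, so a failed request doesn't pollute the conversation sent to the model on the next turn.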

Further Work and Additions

- Refactor project structure for better modularity.

- Update AI handling to enable agents with memory and different specialized functions.

- Add features to open applications on Windows.

- Introduce commands for managing development tools (open/close/add projects).

- Expand capabilities for research assistance.
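To make the idea of agents with their own memory a bit more concrete, here is a rough sketch of how the conversation history could move out of the chat window and into an assistant object. Everything in it is an assumption for illustration: the `Assistant` class, its `ask` method, and the `backend` callable (any function that takes the message list and returns the reply text) are not part of the current code.

```python
class Assistant:
    """An agent that owns its own conversation history."""

    def __init__(self, name, system_prompt, backend):
        self.name = name
        self.backend = backend  # callable: list of messages -> reply text
        self.history = [{"role": "system", "content": system_prompt}]

    def ask(self, user_input):
        """Send one user message and remember both sides of the exchange."""
        self.history.append({"role": "user", "content": user_input})
        try:
            reply = self.backend(self.history)
        except Exception:
            # Roll back the user turn so the history stays consistent.
            self.history.pop()
            raise
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

The chat window would then only call `assistant.ask(user_input)` and display the result. A coding assistant and a research assistant could run side by side, each with its own system prompt and memory, and each possibly backed by a different API.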

If you’d like to contribute to this project, the code is available here: Alfred. Got any ideas for improvements? Leave a comment or reach out. I’d love to hear your input!
