Cost-controlled evaluations are reshaping how AI agents are benchmarked and developed, as highlighted by recent research from Princeton University (AI Agents That Matter). In an AI landscape often dominated by flashy, compute-intensive results, how can we ensure that these agents are truly efficient and practical for real-world use? Cost-controlled evaluation offers one answer: it keeps results from being inflated by extra compute, and it promotes the development of more efficient and economically viable AI systems.

"Compound AI systems will likely be the best way to maximize AI results in the future, and might be one of the most impactful trends in AI in 2024." – Zaharia et al., The Shift from Models to Compound AI Systems

Agentic AI Systems

In the rapidly evolving landscape of AI, agentic systems stand out for their ability to operate independently while pursuing predefined goals. They are becoming increasingly important as a step toward AI agents that can adapt to complex, real-world scenarios. In healthcare, for example, an agentic AI system could monitor patient data and adjust treatment plans in real time, providing personalized care without constant human oversight. Three key factors are used to classify AI systems as agentic:

- Autonomy: Agentic AI systems can make decisions and take actions independently, without constant human intervention. This autonomy allows them to navigate complex environments and solve problems on their own.

- Goal-oriented behavior: These systems are designed with specific objectives in mind. They can formulate plans and execute actions to achieve these goals, adapting their strategies as needed based on feedback and changing circumstances.

- Interaction with the environment: Agentic AI systems can perceive and interact with their surroundings, whether it’s a digital environment or the physical world. This interaction allows them to gather information, learn from experiences, and modify their behavior accordingly.

The degree to which an AI system exhibits these characteristics can vary, leading to different levels of agency. For instance, some systems may have limited autonomy but strong goal-oriented behavior, while others might excel in environmental interaction but require more human guidance for decision-making. Understanding these levels helps researchers and practitioners choose the most suitable type of agent for a given application, with the right balance of autonomy, goal orientation, and environmental interaction.

It’s important to note that the classification of AI systems as agentic is not binary but exists on a spectrum. As AI technology advances, the boundaries between agentic and non-agentic systems continue to blur, presenting new challenges for evaluation and benchmarking.

AI Model Benchmarks

Several key benchmarks drive AI model and agent development, each designed to evaluate specific capabilities:

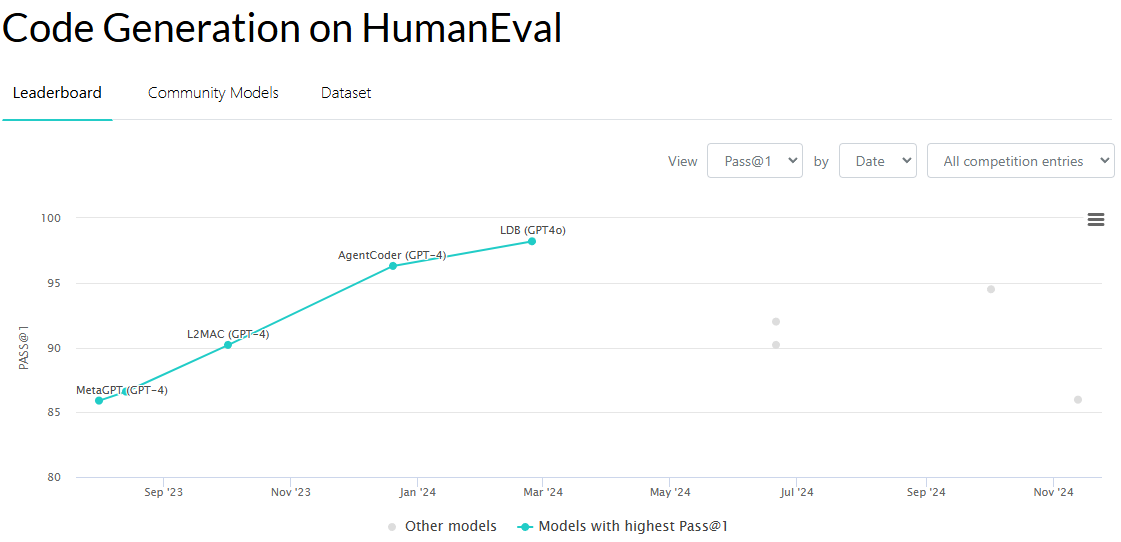

- HumanEval assesses code generation skills

- HotPotQA tests multi-hop question answering

- MMLU (Massive Multitask Language Understanding) covers knowledge across 57 subjects

- WebArena evaluates web interaction abilities

- NovelQA focuses on question answering over long texts (full-length novels)

These benchmarks play a crucial role in measuring progress and guiding research directions in AI. However, it’s important to note that relying solely on these metrics can lead to overfitting and may not always translate to real-world performance, highlighting the need for more comprehensive evaluation methods.

While benchmarks like HumanEval, HotPotQA, and MMLU are pivotal in driving AI agent development, they face significant challenges when applied to real-world scenarios. Many current benchmarks overly emphasize accuracy, leading to the development of unnecessarily complex and costly systems.

For instance, AlphaCode demonstrated how simply retrying solutions multiple times could inflate accuracy metrics, which ultimately leads to an overestimation of an agent’s true capabilities in practical settings.
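The effect of retries on reported accuracy can be illustrated with a little arithmetic. The sketch below assumes a deliberately simplified model that is not taken from the AlphaCode work: every attempt solves a task independently with probability `p`, and a task counts as solved if any of `k` attempts succeeds. The function name `success_within_k` is made up for this example.

```python
def success_within_k(p: float, k: int) -> float:
    """Probability that at least one of k independent attempts succeeds.

    Assumes each attempt succeeds independently with probability p.
    This is a simplification: real attempts are usually correlated.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if k < 1:
        raise ValueError("k must be at least 1")
    return 1.0 - (1.0 - p) ** k


if __name__ == "__main__":
    # Accuracy and inference cost both grow with k, but only the
    # accuracy is visible on an accuracy-only leaderboard.
    for k in (1, 5, 10, 100):
        rate = success_within_k(0.10, k)
        print(f"k={k:>3}  success rate={rate:.3f}  cost (all attempts run)={k}x")
```

Under these assumptions, a system with a modest single-attempt success rate can report a much higher score simply by sampling more often, while its cost per task rises roughly in proportion to the number of attempts.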

Such approaches do not translate well into efficient real-world applications, where cost and resource use are key factors. Additionally, many benchmarks lack proper holdout sets, leading to issues with overfitting, which makes these agents fragile when faced with new and unpredictable tasks. Addressing these challenges is crucial for creating AI systems that are not just top-performers in controlled settings but are also robust and cost-effective in real environments.

Cost-Controlled Agent Evaluation

Cost-controlled evaluations are crucial for accurately assessing AI agent performance and practicality. By accounting for operational expenses, these evaluations prevent artificial inflation of performance metrics through increased compute resources.

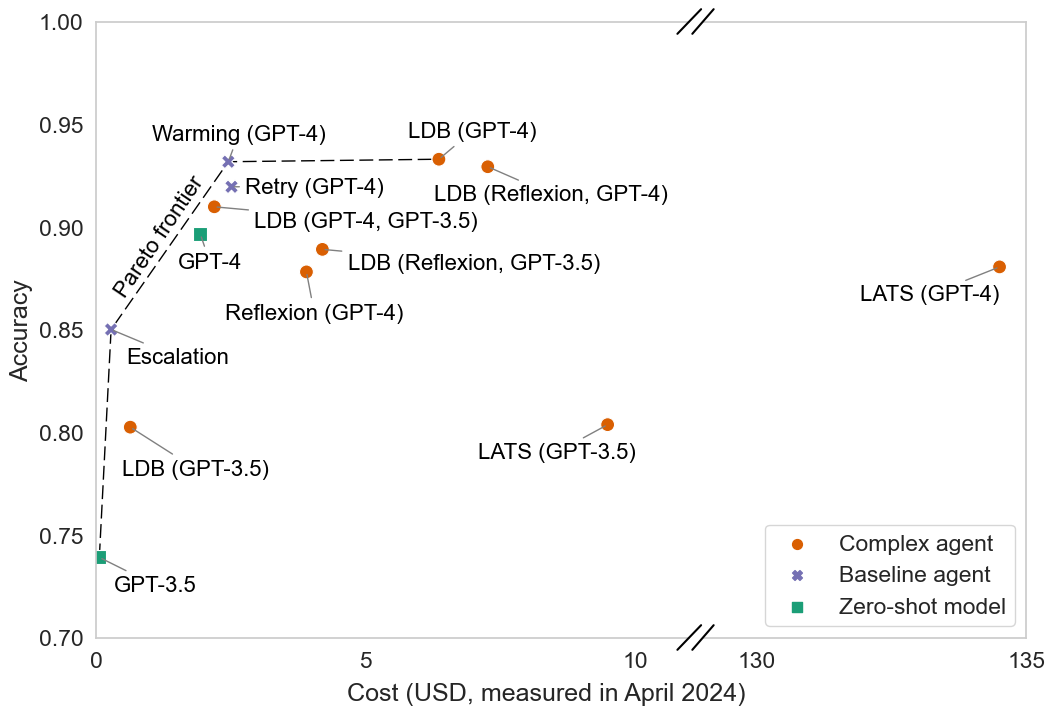

This approach encourages the development of more efficient agent designs that maintain accuracy while reducing costs, which is essential for real-world applications. Visualizing results as a Pareto curve of accuracy versus inference cost allows for joint optimization, revealing important trade-offs that might be obscured when focusing solely on accuracy.

For instance, the cost of running different agents with similar accuracy levels can vary by almost two orders of magnitude. This methodology also helps prevent overfitting to benchmarks and promotes the creation of standardized, reproducible evaluation practices across the AI research community.
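As a rough illustration of the Pareto view, the following sketch picks out the agents that are not dominated on accuracy and cost. The agent names and all numbers are invented for the example and are not results from any paper; `AgentResult` and `pareto_frontier` are hypothetical names.

```python
from typing import NamedTuple


class AgentResult(NamedTuple):
    name: str
    accuracy: float  # fraction of tasks solved, higher is better
    cost_usd: float  # total inference cost, lower is better


def pareto_frontier(results: list[AgentResult]) -> list[AgentResult]:
    """Return the agents that no other agent dominates.

    An agent is dominated if another agent is at least as accurate and
    at least as cheap, and strictly better on one of the two.
    """
    frontier = []
    for a in results:
        dominated = any(
            b.accuracy >= a.accuracy
            and b.cost_usd <= a.cost_usd
            and (b.accuracy > a.accuracy or b.cost_usd < a.cost_usd)
            for b in results
        )
        if not dominated:
            frontier.append(a)
    return sorted(frontier, key=lambda r: r.cost_usd)


# Made-up numbers for illustration only.
results = [
    AgentResult("baseline_llm", 0.80, 0.50),
    AgentResult("retry_x5", 0.90, 2.00),
    AgentResult("complex_agent_a", 0.90, 60.00),
    AgentResult("complex_agent_b", 0.88, 40.00),
    AgentResult("escalation", 0.93, 5.00),
]

for r in pareto_frontier(results):
    print(f"{r.name:<16} accuracy={r.accuracy:.2f} cost=${r.cost_usd:.2f}")
```

In this toy data, the two complex agents drop off the frontier because a cheaper configuration matches or beats their accuracy. An accuracy-only ranking would hide that.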

Simple LLM Strategies vs. Agents

Recent research has challenged the notion that complex AI agents consistently outperform simpler language model strategies. In many cases, straightforward approaches using large language models (LLMs) with basic enhancements can achieve comparable or even superior results to more elaborate agent systems. Three simple strategies have shown particular promise when applied to LLMs:

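- Retry: Re-running the same model on a problem several times (in the Princeton study, up to five times at temperature zero) until a solution passes the provided test cases

- Warming: The same as retry, but with the sampling temperature gradually increased on each attempt

- Escalation: Starting with a cheap model and moving to more expensive models only when the cheaper one fails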

These techniques, when combined, often match or exceed the performance of more complex agent architectures across various benchmarks. This finding has significant implications for both researchers and practitioners in the field of AI.
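A minimal sketch of how such strategies might be wired up is shown below. It follows the descriptions in AI Agents That Matter as we understand them: retry repeats the same call, warming raises the sampling temperature on each attempt, and escalation moves from cheap to expensive models. Everything else is an assumption for illustration: a "model" is any callable taking a task and a temperature, costs are flat per-call units, and the stub models are contrived stand-ins rather than real LLM calls.

```python
from typing import Callable, NamedTuple, Optional

# Hypothetical interface: a model maps (task, temperature) to a candidate answer.
Model = Callable[[str, float], str]


class Attempt(NamedTuple):
    model: Model
    temperature: float
    cost: float  # assumed flat cost per call, in arbitrary units


def solve(task: str, attempts: list[Attempt],
          passes: Callable[[str], bool]) -> tuple[Optional[str], float]:
    """Run attempts in order and stop at the first candidate that passes.

    Returns the accepted candidate (or None) and the total cost spent.
    """
    spent = 0.0
    for attempt in attempts:
        candidate = attempt.model(task, attempt.temperature)
        spent += attempt.cost
        if passes(candidate):
            return candidate, spent
    return None, spent


def retry(model: Model, cost: float, n: int = 5) -> list[Attempt]:
    """Same model, temperature fixed at zero, up to n attempts."""
    return [Attempt(model, 0.0, cost) for _ in range(n)]


def warming(model: Model, cost: float, n: int = 5,
            max_temperature: float = 0.5) -> list[Attempt]:
    """Same model, temperature raised linearly from 0 to max_temperature."""
    step = max_temperature / (n - 1) if n > 1 else 0.0
    return [Attempt(model, i * step, cost) for i in range(n)]


def escalation(models_with_costs: list[tuple[Model, float]]) -> list[Attempt]:
    """One attempt per model, ordered from cheapest to most expensive."""
    return [Attempt(m, 0.0, c) for m, c in models_with_costs]


# Contrived stub models so the sketch runs without any API access.
def always_wrong(task: str, temperature: float) -> str:
    return "wrong"

def always_right(task: str, temperature: float) -> str:
    return "correct"

def temperature_sensitive(task: str, temperature: float) -> str:
    return "correct" if temperature >= 0.25 else "wrong"

def is_correct(candidate: str) -> bool:
    return candidate == "correct"


print(solve("demo", retry(temperature_sensitive, 1.0), is_correct))
print(solve("demo", warming(temperature_sensitive, 1.0), is_correct))
print(solve("demo", escalation([(always_wrong, 1.0), (always_right, 10.0)]), is_correct))
```

Because `solve` tracks the cost spent alongside the answer, each strategy can be compared on cost as well as accuracy. In a real setup, `passes` would typically run the test cases supplied with the task.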

The effectiveness of these simpler strategies highlights a crucial point in AI development: increased complexity does not always equate to better performance. In fact, the study from Princeton University demonstrates that many state-of-the-art (SOTA) agents are unnecessarily complex and costly. This overcomplication can lead to diminishing returns in terms of accuracy gains while significantly increasing computational expenses.

Moreover, the success of these simpler approaches challenges some prevailing assumptions in the AI community. It suggests that many of the perceived advantages of complex agent systems might be achievable through more efficient use of existing LLM capabilities. This realization could potentially redirect research efforts towards optimizing LLM usage rather than developing increasingly intricate agent architectures.

However, it’s important to note that this doesn’t negate the value of agent-based systems entirely. Certain tasks, particularly those requiring long-term planning or complex multi-step reasoning, may still benefit from more sophisticated agent designs. The key takeaway is the need for a more nuanced approach to AI system design, where the complexity of the solution is carefully balanced against the requirements of the task and the associated computational costs.

Conclusion: Real-World Agent Applications

As AI technology continues to evolve, the real-world applications of agentic systems are becoming increasingly evident. From autonomous decision-making to goal-oriented behaviors and complex environmental interactions, these systems are transforming industries ranging from healthcare and finance to logistics and customer service. The insights gained from cost-controlled evaluations and benchmark analyses are crucial in guiding the development of AI agents that are not only powerful but also economically viable for practical use.

The emphasis on balancing complexity, cost, and performance highlights the importance of a strategic approach to AI development. This balance ensures that AI systems are not only powerful but also practical, scalable, and capable of making a real impact across diverse industries. By leveraging both agentic systems and simpler LLM strategies where appropriate, researchers and practitioners can build AI solutions that are well-suited to their specific real-world challenges, ultimately driving innovation and efficiency in a sustainable manner.

If you’re interested in exploring how to apply these cost-controlled strategies and agentic systems in your own projects, or if you want to contribute to advancing AI efficiency, now is the perfect time to get involved. Collaborate with peers, experiment with new evaluation methods, and help shape the future of AI development.
